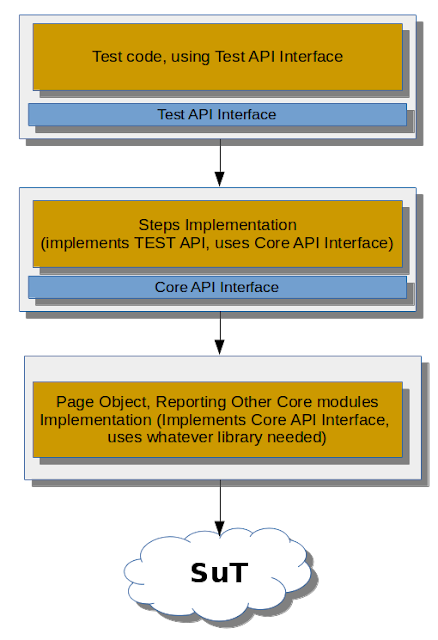

Test automation framework architecture. Part 2.2 - Two-layered architecture

As we've learned in previous posts of the series, Layered (also known as Tiered) architecture is a de facto industry standard. There are two major variations of this architectural pattern that one should be aware of: 3-layered architecture (described here) and 2-layered architecture. This post concentrates on 2-layered architecture.

2-layered architecture is typically seen in UI-specific test automation frameworks. The key feature of this pattern is the absence of a Business layer, so most of the business-related logic goes into Page Objects. To avoid the "God class" anti-pattern while still keeping business-related logic in Page Objects, one usually uses libraries or frameworks that simplify locating DOM elements, letting Page Objects concentrate less on locating elements and more on the business logic itself. The good thing about this architectural pattern is that it actually allows you...
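
To make the two layers concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the framework this series uses: `FakeBrowser`, `LoginPage`, `login_as`, and the CSS locators are all hypothetical names, and the in-memory `FakeBrowser` merely stands in for whatever element-location library a real project would pick. The point is only that the test talks directly to a Page Object, and the Page Object carries the business-level action, with no Business layer in between.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBrowser:
    """Hypothetical in-memory stand-in for a real browser driver or locator library."""
    fields: dict = field(default_factory=dict)
    clicked: list = field(default_factory=list)

    def type_into(self, locator: str, text: str) -> None:
        # Record the text "typed" into the element found by the locator.
        self.fields[locator] = text

    def click(self, locator: str) -> None:
        # Record that the element found by the locator was clicked.
        self.clicked.append(locator)


class LoginPage:
    """Layer 1, the Page Object: holds locators AND the business-level action."""

    # Hypothetical locators, kept short because the helper hides lookup details.
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#submit"

    def __init__(self, browser: FakeBrowser) -> None:
        self.browser = browser

    def login_as(self, username: str, password: str) -> "LoginPage":
        # Business logic lives here, since there is no separate Business layer.
        self.browser.type_into(self.USERNAME, username)
        self.browser.type_into(self.PASSWORD, password)
        self.browser.click(self.SUBMIT)
        return self


def test_user_can_submit_credentials() -> None:
    """Layer 2, the test: calls the Page Object directly."""
    browser = FakeBrowser()
    LoginPage(browser).login_as("alice", "s3cret")
    assert browser.fields == {"#username": "alice", "#password": "s3cret"}
    assert browser.clicked == ["#submit"]
```

Because element lookup is reduced to one-line calls on the helper, `LoginPage` stays small even though it owns the login flow, which is how this style tries to keep Page Objects from growing into a God class.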